AWS Lambda Python List and POST to Append CSV File in S3

What we are trying to build here is

- AWS Lambda function #1

Accept POST then write to S3 file - AWS Lambda function #2

Accept GET list content in such S3 file

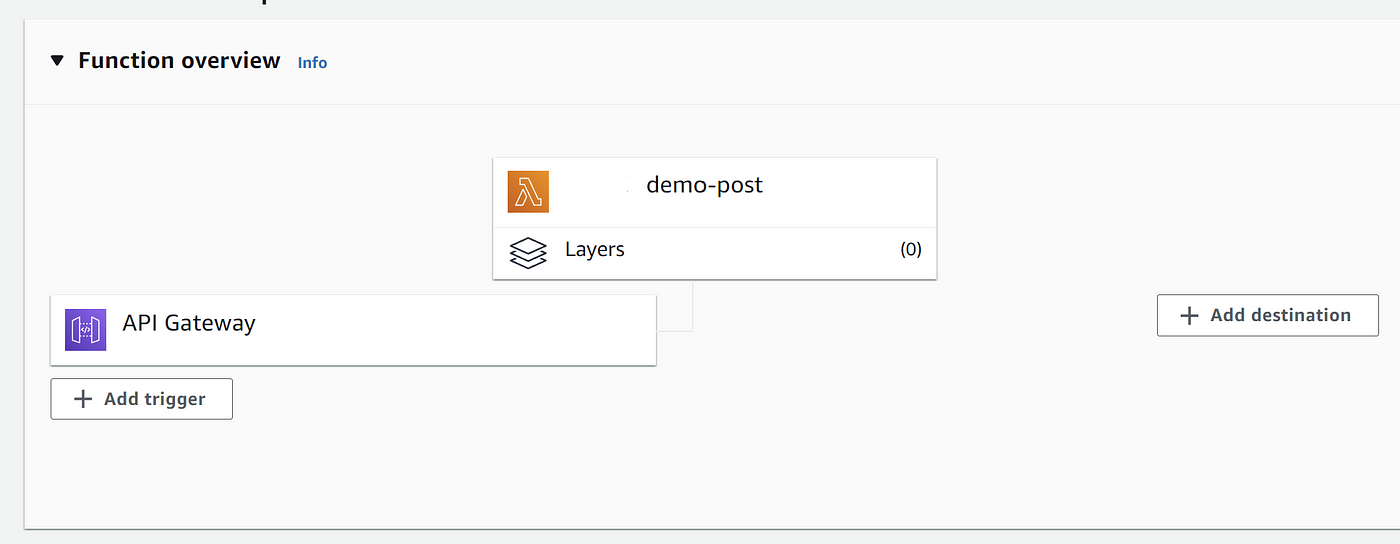

1. Create Lambda

Go to you AWS console then create the Lambda function

Then link the trigger to the API Gateway

Note that if you don’t specific something AWS help you create this new Lambda role for you

Role works like a user.

2. Then create the S3

Go to console to create the bucket

Then create the local folder then sync upward to the S3

We will code the Lambda to read / write this file in the next step

mkdir -p my-demo/sample

echo 'one,two,three' > my-demo/sample/output.csv

aws s3 sync . s3://my-demo

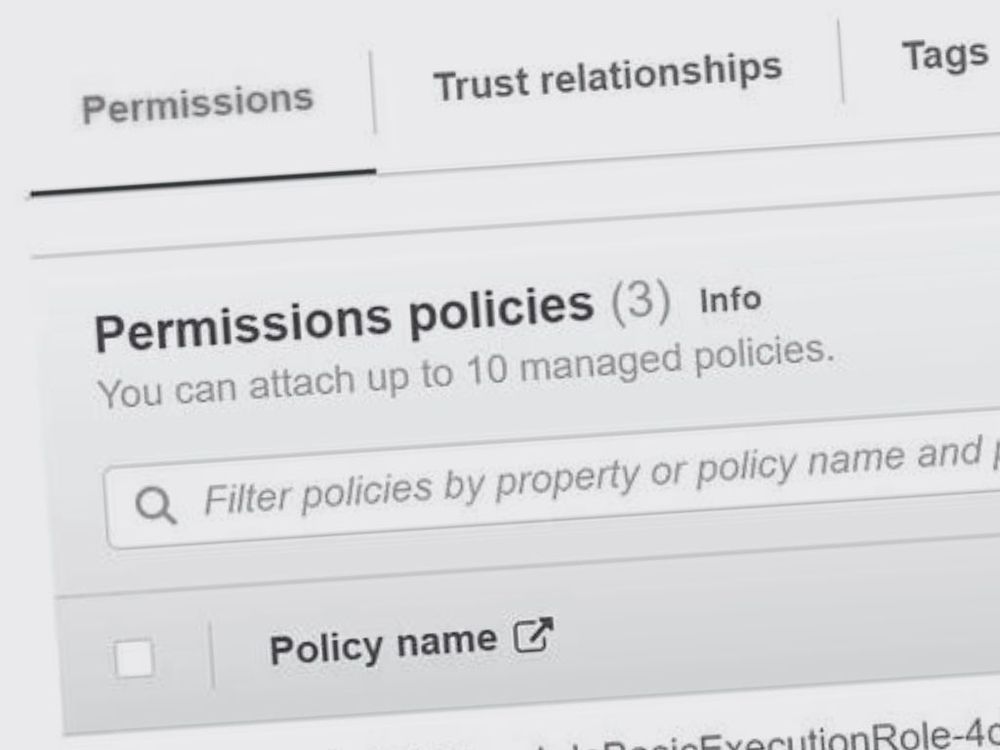

3. Some config you need for permission

Then you need to add some permission to make Lambda able to access the file in S3.

For the sake of demo, we just dangerously add AmazonS3FullAccess hereFor real implementation, you better add more granularity of access here by edit the JSON policy and attach to it.

4. Lambda Method 1: Dump all

We will use built-in boto3 Python package here.

The boto3 is AWS maintained Python package syntax of upload/download file are:

s3 = boto3.client('s3')

s3.download_file(bucket, object_name, file_name)

s3.upload_file(file_name, bucket, object_name)

Then we write the Lambda function

import json

import boto3

import csv

BUCKET_NAME = 'my-demo'

OBJECT_NAME = 'sample/output.csv'

LAMBDA_LOCAL_TMP_FILE = '/tmp/test.csv'

def lambda_handler(event, context):

# TODO implement

s3 = boto3.client('s3')

s3.download_file(BUCKET_NAME, OBJECT_NAME, LAMBDA_LOCAL_TMP_FILE)

# ONLY '/tmp' on Lambda is WRITABLE

# Otherwise you will got "Read-only file system"

fields=['first','second','third']

with open(LAMBDA_LOCAL_TMP_FILE,'r') as infile:

reader = list(csv.reader(infile))

return {

'statusCode': 200,

'body': json.dumps(reader)

}5. Lambda Method 2: Append file

import json

import boto3

import csv

BUCKET_NAME = 'my-demo'

OBJECT_NAME = 'sample/output.csv'

LAMBDA_LOCAL_TMP_FILE = '/tmp/test.csv'

def lambda_handler(event, context):

# TODO implement

s3 = boto3.client('s3')

s3.download_file(BUCKET_NAME, OBJECT_NAME, LAMBDA_LOCAL_TMP_FILE)

# ONLY '/tmp' on Lambda is WRITABLE

# Otherwise you will got "Read-only file system"

print(event['body'])

body = json.loads(event['body'])

row = [body['first_name'], body['client_id'], body['country']]

with open(LAMBDA_LOCAL_TMP_FILE, 'a') as f:

writer = csv.writer(f)

writer.writerow(row)

# upload file from tmp to s3 OBJECT_NAME

s3.upload_file(LAMBDA_LOCAL_TMP_FILE, BUCKET_NAME, OBJECT_NAME)

return {

'statusCode': 200,

'body': json.dumps('success')

}6. Some Convenient during Dev time

You might need to reset the file you can do it like so

aws s3 sync . s3://my-demo

Common bug

Error “Read-only file system” in AWS Lambda when downloading a file from S3

When you are in lambda, you must only write to /tmp/<your_file_name>

string indices must be integers

Lambda proxy integration type don’t help you parse the JSON

"body:

"{\"first_name\":\"john\",\"client_id\":\"12345\",\"country\":\"japan\"}"

Hope this help !